doctr.transforms

Data transformations are part of both the training and inference procedures. Drawing inspiration from the design of torchvision, we express transformations as composable modules.

Supported transformations

Here are all the transformations available through docTR:

- class doctr.transforms.modules.Resize(size: int | tuple[int, int], interpolation=InterpolationMode.BILINEAR, preserve_aspect_ratio: bool = False, symmetric_pad: bool = False)

Resize the input image to the given size

>>> import torch
>>> from doctr.transforms import Resize
>>> transfo = Resize((64, 64), preserve_aspect_ratio=True, symmetric_pad=True)
>>> out = transfo(torch.rand((3, 64, 64)))

- Parameters:

size – output size in pixels, either a tuple (height, width) or a single integer for square images

interpolation – interpolation mode to use for resizing, default is bilinear

preserve_aspect_ratio – whether to preserve the aspect ratio of the image; if True, the image is resized to fit within the target size while keeping its aspect ratio

symmetric_pad – whether to symmetrically pad the image to the target size; if True, the padding is split equally between both sides
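The interplay between `preserve_aspect_ratio` and `symmetric_pad` comes down to simple size arithmetic. The sketch below is a plain-Python illustration, not docTR's implementation: `resize_plan` is a hypothetical helper, and anchoring the image in the top-left corner when padding is not symmetric is an assumption.

```python
import math


def resize_plan(src_hw, target_hw, preserve_aspect_ratio=False, symmetric_pad=False):
    """Hypothetical helper: return the resized (h, w) and the (left, right, top, bottom) padding."""
    src_h, src_w = src_hw
    tgt_h, tgt_w = target_hw
    if not preserve_aspect_ratio:
        # Plain resize: the image is stretched to the target size, no padding needed
        return (tgt_h, tgt_w), (0, 0, 0, 0)
    # Largest scale factor that keeps the whole image inside the target box
    scale = min(tgt_h / src_h, tgt_w / src_w)
    new_h = min(tgt_h, max(1, round(src_h * scale)))
    new_w = min(tgt_w, max(1, round(src_w * scale)))
    pad_h, pad_w = tgt_h - new_h, tgt_w - new_w
    if symmetric_pad:
        # Split the padding between both sides (an odd extra pixel goes left/top)
        left, top = math.ceil(pad_w / 2), math.ceil(pad_h / 2)
    else:
        # Assumption: the image stays anchored in the top-left corner
        left, top = 0, 0
    return (new_h, new_w), (left, pad_w - left, top, pad_h - top)


print(resize_plan((100, 200), (64, 64), preserve_aspect_ratio=True, symmetric_pad=True))
# ((32, 64), (0, 0, 16, 16))
```

A 100x200 image fits a 64x64 target as 32x64, leaving 32 rows of padding that symmetric padding splits 16/16.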

- class doctr.transforms.modules.GaussianNoise(mean: float = 0.0, std: float = 1.0)

Adds Gaussian noise to the input tensor

>>> import torch
>>> from doctr.transforms import GaussianNoise
>>> transfo = GaussianNoise(0., 1.)
>>> out = transfo(torch.rand((3, 224, 224)))

- Parameters:

mean – mean of the Gaussian distribution

std – standard deviation of the Gaussian distribution
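As a rough illustration of what the two parameters control, here is a plain-Python sketch that adds one Gaussian sample per pixel. The helper `add_gaussian_noise`, the flat list-of-floats image and the clipping to [0, 1] are assumptions made for the example; docTR's own sampling and clipping may differ.

```python
import random


def add_gaussian_noise(pixels, mean=0.0, std=1.0, seed=None):
    """Sketch: add independent Gaussian noise to a flat list of float pixels in [0, 1]."""
    rng = random.Random(seed)
    # One noise sample per pixel, then clip back to the valid [0, 1] range (an assumption)
    return [min(1.0, max(0.0, p + rng.gauss(mean, std))) for p in pixels]


noisy = add_gaussian_noise([0.2, 0.5, 0.8], mean=0.0, std=0.05, seed=0)
print(noisy)
```

With `std=0.0` and `mean=0.0` the pixels come back unchanged, which is a handy sanity check.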

- class doctr.transforms.modules.ChannelShuffle

Randomly shuffle the channel order of a given image
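Shuffling channels amounts to applying a random permutation to the channel axis. A minimal sketch on a channel-first image stored as nested lists; `channel_shuffle` is a hypothetical name, not part of docTR.

```python
import random


def channel_shuffle(channels, seed=None):
    """Sketch: randomly reorder the channels of a channel-first image (a list of 2D channels)."""
    rng = random.Random(seed)
    order = list(range(len(channels)))
    rng.shuffle(order)  # random permutation of the channel indices
    return [channels[i] for i in order]


rgb = [[[1.0]], [[0.5]], [[0.0]]]  # a 1x1 RGB image, one nested list per channel
print(channel_shuffle(rgb, seed=1))
```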

- class doctr.transforms.modules.RandomHorizontalFlip(p=0.5)

Randomly flip the input image horizontally
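If boxes accompany the image, they have to be mirrored too so that image and targets stay aligned. The sketch below assumes relative `(xmin, ymin, xmax, ymax)` boxes and uses a hypothetical helper `random_hflip`; it illustrates the geometry rather than docTR's code.

```python
import random


def random_hflip(image, boxes, p=0.5, rng=random):
    """Sketch: flip a (H, W) image and its relative (xmin, ymin, xmax, ymax) boxes with probability p."""
    if rng.random() >= p:
        return image, boxes  # transformation skipped
    flipped = [row[::-1] for row in image]  # mirror every row
    # Mirroring maps x to 1 - x, which also swaps the roles of xmin and xmax
    flipped_boxes = [(1 - xmax, ymin, 1 - xmin, ymax) for xmin, ymin, xmax, ymax in boxes]
    return flipped, flipped_boxes


print(random_hflip([[0, 1, 2]], [(0.25, 0.5, 0.5, 0.75)], p=1.0))
# ([[2, 1, 0]], [(0.5, 0.5, 0.75, 0.75)])
```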

- class doctr.transforms.modules.RandomShadow(opacity_range: tuple[float, float] | None = None)

Adds a random shadow to the input image

>>> import torch
>>> from doctr.transforms import RandomShadow
>>> transfo = RandomShadow((0., 1.))
>>> out = transfo(torch.rand((3, 64, 64)))

- Parameters:

opacity_range – minimum and maximum opacity of the shade
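A deliberately simplified sketch of the idea: darken one random rectangle by a factor drawn from `opacity_range`. The rectangular shape, the grayscale nested-list image, the default range `(0.2, 0.8)` and the name `random_rect_shadow` are assumptions for illustration; the shadow shapes docTR actually generates are not described in this section.

```python
import random


def random_rect_shadow(image, opacity_range=(0.2, 0.8), rng=random):
    """Simplified sketch: darken a random rectangle of a (H, W) grayscale image in [0, 1]."""
    h, w = len(image), len(image[0])
    opacity = rng.uniform(*opacity_range)  # 0 leaves pixels unchanged, 1 turns them black
    # Random rectangle covering rows [y0, y1) and columns [x0, x1)
    y0, x0 = rng.randrange(h), rng.randrange(w)
    y1, x1 = rng.randint(y0 + 1, h), rng.randint(x0 + 1, w)
    out = [row[:] for row in image]  # copy so the input is left untouched
    for y in range(y0, y1):
        for x in range(x0, x1):
            out[y][x] *= 1.0 - opacity
    return out


shaded = random_rect_shadow([[1.0] * 4 for _ in range(4)], opacity_range=(0.5, 0.5))
print(shaded)
```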

- class doctr.transforms.modules.RandomResize(scale_range: tuple[float, float] = (0.3, 0.9), preserve_aspect_ratio: bool | float = False, symmetric_pad: bool | float = False, p: float = 0.5)

Randomly resize the input image and align corresponding targets

>>> import numpy as np
>>> import torch
>>> from doctr.transforms import RandomResize
>>> transfo = RandomResize((0.3, 0.9), preserve_aspect_ratio=True, symmetric_pad=True, p=0.5)
>>> out, boxes = transfo(torch.rand((3, 64, 64)), np.array([[0.1, 0.1, 0.4, 0.3]], dtype=np.float32))

- Parameters:

scale_range – range of the resizing factor for width and height (independently)

preserve_aspect_ratio – whether to preserve the aspect ratio of the image; if given a float value, the aspect ratio will be preserved with this probability

symmetric_pad – whether to symmetrically pad the image; if given a float value, the symmetric padding will be applied with this probability

p – probability to apply the transformation
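The parameter descriptions above imply a few random decisions per call: whether to apply the transform at all, the two scale factors, and, for float-valued options, whether each option is enabled. The following sketch draws only those decisions; `sample_random_resize` is hypothetical, and the actual resizing (for instance multiplying the original height and width by the sampled factors) is left out as an assumption.

```python
import random


def sample_random_resize(scale_range=(0.3, 0.9), preserve_aspect_ratio=False,
                         symmetric_pad=False, p=0.5, rng=random):
    """Sketch: draw the random decisions of a RandomResize-style transform.

    Returns None when the transform is skipped, else (scale_h, scale_w, keep_ratio, sym_pad).
    """
    if rng.random() >= p:
        return None
    # Height and width factors are sampled independently
    scale_h = rng.uniform(*scale_range)
    scale_w = rng.uniform(*scale_range)

    def decide(flag):
        # A bool is used as-is, a float is read as the probability of enabling the option
        if isinstance(flag, bool):
            return flag
        return rng.random() < flag

    return scale_h, scale_w, decide(preserve_aspect_ratio), decide(symmetric_pad)


print(sample_random_resize(preserve_aspect_ratio=True, symmetric_pad=0.5, p=1.0))
```

Passing `True` or `False` fixes an option, while a float such as `0.5` enables it on roughly half of the calls.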

Composing transformations

It is common to require several transformations to be performed consecutively.

- class doctr.transforms.modules.SampleCompose(transforms: list[Callable[[Any, Any], tuple[Any, Any]]])

Implements a wrapper that applies transformations sequentially to both the image and the target

>>> import numpy as np
>>> import torch
>>> from doctr.transforms import SampleCompose, ImageTransform, ColorInversion, RandomRotate
>>> transfos = SampleCompose([ImageTransform(ColorInversion(min_val=0.6)), RandomRotate(30)])
>>> out, out_boxes = transfos(torch.rand(3, 64, 64), np.zeros((2, 4)))

- Parameters:

transforms – list of transformation modules
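Sequential composition over `(image, target)` pairs can be sketched in a few lines of plain Python. `compose_samples` and the toy transforms are hypothetical stand-ins that only demonstrate the left-to-right chaining; they are not docTR's `SampleCompose`.

```python
from typing import Any, Callable


def compose_samples(transforms: list[Callable[[Any, Any], tuple[Any, Any]]]):
    """Sketch: chain (image, target) -> (image, target) callables, left to right."""
    def apply(img: Any, target: Any) -> tuple[Any, Any]:
        for transform in transforms:
            img, target = transform(img, target)  # each step sees the previous output
        return img, target
    return apply


def add_one(img, target):
    return img + 1, target


def double_with_boxes(img, target):
    return img * 2, [2 * v for v in target]


pipeline = compose_samples([add_one, double_with_boxes])
print(pipeline(3, [0.25]))  # (8, [0.5])
```

Order matters: swapping the two steps in this example yields `(7, [0.5])` instead of `(8, [0.5])`.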

- class doctr.transforms.modules.ImageTransform(transform: Callable[[Any], Any])

Implements a transform wrapper to turn an image-only transformation into an image+target transform

>>> import torch
>>> from doctr.transforms import ImageTransform, ColorInversion
>>> transfo = ImageTransform(ColorInversion(min_val=0.6))
>>> out, _ = transfo(torch.rand(8, 3, 64, 64), None)

- Parameters:

transform – the image transformation module to wrap
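The wrapping idea is small enough to sketch directly: transform the image, pass the target through unchanged. `ImageOnlyWrapper` is a hypothetical name chosen to avoid confusion with docTR's own class.

```python
from typing import Any, Callable


class ImageOnlyWrapper:
    """Hypothetical wrapper: lift an image-only callable to an (image, target) transform."""

    def __init__(self, transform: Callable[[Any], Any]) -> None:
        self.transform = transform

    def __call__(self, img: Any, target: Any) -> tuple[Any, Any]:
        # Only the image is transformed; the target is passed through untouched
        return self.transform(img), target


def negate(img):
    return -img


wrapped = ImageOnlyWrapper(negate)
print(wrapped(5, [(0.25, 0.25, 0.5, 0.5)]))  # (-5, [(0.25, 0.25, 0.5, 0.5)])
```

This pattern is what lets an image-only operation such as `ColorInversion` sit in a `SampleCompose` pipeline next to box-aware steps like `RandomRotate`.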

- class doctr.transforms.modules.ColorInversion(min_val: float = 0.5)

Applies the following transformation to a tensor (image or batch of images): convert to grayscale, colorize (randomly shift the RGB values), and then invert colors

>>> import torch
>>> from doctr.transforms import ColorInversion
>>> transfo = ColorInversion(min_val=0.6)
>>> out = transfo(torch.rand(8, 3, 64, 64))

- Parameters:

min_val – lower bound of the range [min_val, 1] used to colorize RGB pixels
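The three steps listed above (grayscale, random colorization, inversion) can be sketched per pixel for float images in [0, 1]. The luma weights `(0.299, 0.587, 0.114)`, drawing one factor per channel for the whole image, and the helper name `color_inversion` are assumptions for illustration, not a description of docTR's internals.

```python
import random


def color_inversion(pixels, min_val=0.5, rng=random):
    """Sketch: grayscale, random RGB shift in [min_val, 1], then invert.

    Pixels are (r, g, b) tuples of floats in [0, 1].
    """
    # One random factor per output channel, shared by every pixel of the image
    shifts = [rng.uniform(min_val, 1.0) for _ in range(3)]
    out = []
    for r, g, b in pixels:
        gray = 0.299 * r + 0.587 * g + 0.114 * b  # assumed luma weights
        # Colorize the gray value channel by channel, then invert it
        out.append(tuple(1.0 - gray * s for s in shifts))
    return out


print(color_inversion([(0.0, 0.0, 0.0)]))  # [(1.0, 1.0, 1.0)]: black turns white
```

A larger `min_val` narrows the range of the random factors, so the output stays closer to a plain inverted grayscale image.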

- class doctr.transforms.modules.OneOf(transforms: list[Callable[[Any], Any]])

Randomly apply one of the input transformations

>>> import torch
>>> from doctr.transforms import OneOf, ColorInversion, GaussianNoise
>>> transfo = OneOf([ColorInversion(), GaussianNoise(0., 0.1)])
>>> out = transfo(torch.rand(3, 64, 64))

- Parameters:

transforms – list of transformations, only one of which will be picked
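Picking one transformation at random can be sketched as follows; `one_of` and the toy transforms are hypothetical, and the uniform choice among candidates is an assumption about how the pick is made.

```python
import random
from typing import Any, Callable


def one_of(transforms: list[Callable[[Any], Any]], rng=random):
    """Sketch: build a transform that applies exactly one randomly picked transform."""
    def apply(img: Any) -> Any:
        transform = rng.choice(transforms)  # uniform choice among the candidates
        return transform(img)
    return apply


def add_one(x):
    return x + 1


def times_ten(x):
    return x * 10


pick = one_of([add_one, times_ten])
print(pick(2))  # either 3 or 20, never 30
```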

- class doctr.transforms.modules.RandomApply(transform: Callable[[Any], Any], p: float = 0.5)

Apply the input transformation with probability p

>>> import torch
>>> from doctr.transforms import RandomApply, GaussianNoise
>>> transfo = RandomApply(GaussianNoise(0., 0.1), p=0.5)
>>> out = transfo(torch.rand(3, 64, 64))

- Parameters:

transform – transformation to apply

p – probability of applying the transformation
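Applying a transformation with probability `p` boils down to one uniform draw per call. A minimal sketch with a hypothetical `random_apply` helper; passing a seeded `random.Random` makes the behaviour reproducible.

```python
import random
from typing import Any, Callable


def random_apply(transform: Callable[[Any], Any], p: float = 0.5, rng=random):
    """Sketch: apply `transform` with probability p, otherwise return the input unchanged."""
    def apply(img: Any) -> Any:
        return transform(img) if rng.random() < p else img
    return apply


def negate(x):
    return -x


maybe_negate = random_apply(negate, p=0.5, rng=random.Random(0))
print([maybe_negate(1) for _ in range(5)])
```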

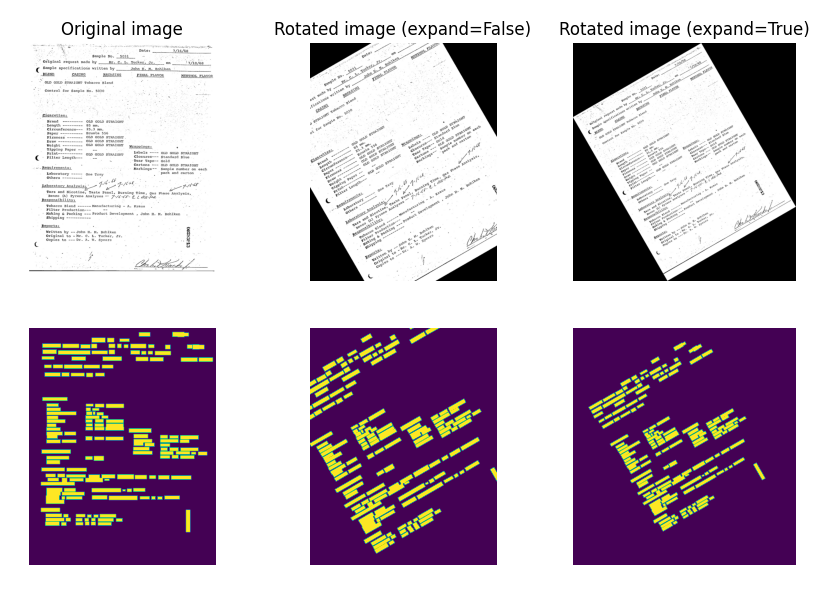

- class doctr.transforms.modules.RandomRotate(max_angle: float = 5.0, expand: bool = False)

Randomly rotate a tensor image and its boxes

- Parameters:

max_angle – maximum angle for rotation, in degrees. Angles will be uniformly picked in [-max_angle, max_angle]

expand – whether the image should be padded before the rotation
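How a rotation moves box corners can be sketched with elementary trigonometry. This plain-Python example rotates the four corners of an absolute box about the image center by an angle drawn uniformly in `[-max_angle, max_angle]`. The helpers `rotate_points` and `random_rotate_box`, the sign convention (positive angles turn counter-clockwise on screen) and the absence of canvas expansion (as with `expand=False`) are assumptions, not docTR's code.

```python
import math
import random


def rotate_points(points, angle_deg, center):
    """Rotate (x, y) pixel points counter-clockwise as displayed (image y axis points down)."""
    theta = math.radians(angle_deg)
    cx, cy = center
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    rotated = []
    for x, y in points:
        dx, dy = x - cx, y - cy
        rotated.append((cx + dx * cos_t + dy * sin_t, cy - dx * sin_t + dy * cos_t))
    return rotated


def random_rotate_box(box, img_hw, max_angle=5.0, rng=random):
    """Sketch: rotate an absolute (xmin, ymin, xmax, ymax) box about the image center.

    The angle is drawn uniformly in [-max_angle, max_angle]; the canvas is not expanded.
    Returns the angle and the 4 rotated corners.
    """
    angle = rng.uniform(-max_angle, max_angle)
    xmin, ymin, xmax, ymax = box
    corners = [(xmin, ymin), (xmax, ymin), (xmax, ymax), (xmin, ymax)]
    h, w = img_hw
    return angle, rotate_points(corners, angle, (w / 2, h / 2))


angle, corners = random_rotate_box((10, 10, 30, 20), (64, 64), max_angle=30.0)
print(round(angle, 2), corners)
```

The rotated corners form a polygon; whether to keep it as is or replace it by its axis-aligned bounding box is a separate design choice.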

- class doctr.transforms.modules.RandomCrop(scale: tuple[float, float] = (0.08, 1.0), ratio: tuple[float, float] = (0.75, 1.33))

Randomly crop a tensor image and its boxes

- Parameters:

scale – tuple of floats, relative (min_area, max_area) of the crop

ratio – tuple of floats, relative (min_ratio, max_ratio) where ratio = h/w
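A crop following the `scale` and `ratio` descriptions above can be sampled by solving `w * h = area` and `h / w = ratio` in relative coordinates; the boxes are then re-expressed in crop coordinates and clipped. This is a sketch under stated assumptions: `sample_crop` and `crop_boxes` are hypothetical helpers, boxes are relative `(xmin, ymin, xmax, ymax)`, and working in relative coordinates means the ratio only matches the pixel ratio for square images.

```python
import math
import random


def sample_crop(scale=(0.08, 1.0), ratio=(0.75, 1.33), rng=random):
    """Sketch: draw a relative crop (xmin, ymin, xmax, ymax) inside the unit square."""
    area = rng.uniform(*scale)  # fraction of the image area to keep
    r = rng.uniform(*ratio)  # h / w, following the parameter description
    # Solve w * h = area and h / w = r, clamping to the image (this can shrink the area)
    w = min(1.0, math.sqrt(area / r))
    h = min(1.0, math.sqrt(area * r))
    x0 = rng.uniform(0.0, 1.0 - w)
    y0 = rng.uniform(0.0, 1.0 - h)
    return x0, y0, x0 + w, y0 + h


def crop_boxes(boxes, crop):
    """Map relative boxes into crop coordinates, clip them, and drop boxes left empty."""
    cx0, cy0, cx1, cy1 = crop
    cw, ch = cx1 - cx0, cy1 - cy0

    def clip(v):
        return min(max(v, 0.0), 1.0)

    kept = []
    for x0, y0, x1, y1 in boxes:
        nx0, nx1 = clip((x0 - cx0) / cw), clip((x1 - cx0) / cw)
        ny0, ny1 = clip((y0 - cy0) / ch), clip((y1 - cy0) / ch)
        if nx1 > nx0 and ny1 > ny0:  # the box still overlaps the crop
            kept.append((nx0, ny0, nx1, ny1))
    return kept


print(crop_boxes([(0.25, 0.25, 0.75, 0.75), (0.6, 0.6, 0.9, 0.9)], (0.0, 0.0, 0.5, 0.5)))
# [(0.5, 0.5, 1.0, 1.0)]
```

Here the first box is cut down to the part inside the crop, while the second lies entirely outside it and is dropped.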