doctr.datasets

- class doctr.datasets.FUNSD(train: bool = True, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)[source]¶

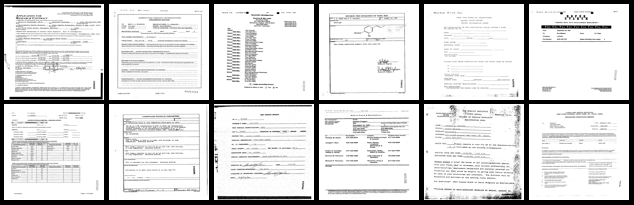

FUNSD dataset from “FUNSD: A Dataset for Form Understanding in Noisy Scanned Documents”.

>>> from doctr.datasets import FUNSD >>> train_set = FUNSD(train=True, download=True) >>> img, target = train_set[0]

- Parameters:

train – whether the subset should be the training one

use_polygons – whether polygons should be considered as rotated bounding box (instead of straight ones)

recognition_task – whether the dataset should be used for recognition task

detection_task – whether the dataset should be used for detection task

**kwargs – keyword arguments from VisionDataset.
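To make the `use_polygons` distinction concrete, the sketch below turns a straight box into the equivalent 4-point polygon. The `(xmin, ymin, xmax, ymax)` layout, the corner order, and the helper name `box_to_polygon` are assumptions made for illustration; they are not taken from the docTR API.

```python
def box_to_polygon(box):
    """Convert a straight box (xmin, ymin, xmax, ymax) into a 4-point polygon.

    Hypothetical helper: the corner order (top-left, top-right,
    bottom-right, bottom-left) is an assumption, not taken from docTR.
    """
    xmin, ymin, xmax, ymax = box
    return [(xmin, ymin), (xmax, ymin), (xmax, ymax), (xmin, ymax)]


straight_boxes = [(10, 20, 110, 60), (30, 80, 90, 100)]  # shape (N, 4)
polygons = [box_to_polygon(box) for box in straight_boxes]  # shape (N, 4, 2)
print(polygons[0])
```

A straight box needs four numbers, while a polygon needs four points, which is why the two target layouts have shapes (N, 4) and (N, 4, 2).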

- class doctr.datasets.SROIE(train: bool = True, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)[source]¶

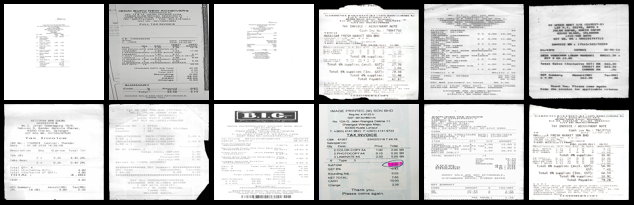

SROIE dataset from “ICDAR2019 Competition on Scanned Receipt OCR and Information Extraction”.

>>> from doctr.datasets import SROIE >>> train_set = SROIE(train=True, download=True) >>> img, target = train_set[0]

- Parameters:

train – whether the subset should be the training one

use_polygons – whether polygons should be considered as rotated bounding box (instead of straight ones)

recognition_task – whether the dataset should be used for recognition task

detection_task – whether the dataset should be used for detection task

**kwargs – keyword arguments from VisionDataset.

- class doctr.datasets.CORD(train: bool = True, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)

CORD dataset from “CORD: A Consolidated Receipt Dataset for Post-OCR Parsing”.

>>> from doctr.datasets import CORD
>>> train_set = CORD(train=True, download=True)
>>> img, target = train_set[0]

- Parameters:

train – whether the subset should be the training one

use_polygons – whether polygons should be treated as rotated bounding boxes (instead of straight ones)

recognition_task – whether the dataset should be used for the recognition task

detection_task – whether the dataset should be used for the detection task

**kwargs – keyword arguments from VisionDataset.

- class doctr.datasets.IIIT5K(train: bool = True, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)

IIIT-5K character-level localization dataset from “BMVC 2012 Scene Text Recognition using Higher Order Language Priors”.

>>> # NOTE: this dataset is for character-level localization
>>> from doctr.datasets import IIIT5K
>>> train_set = IIIT5K(train=True, download=True)
>>> img, target = train_set[0]

- Parameters:

train – whether the subset should be the training one

use_polygons – whether polygons should be treated as rotated bounding boxes (instead of straight ones)

recognition_task – whether the dataset should be used for the recognition task

detection_task – whether the dataset should be used for the detection task

**kwargs – keyword arguments from VisionDataset.

- class doctr.datasets.SVT(train: bool = True, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)[source]¶

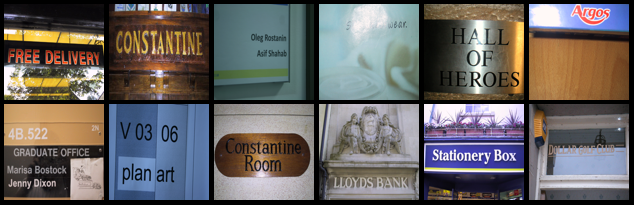

SVT dataset from “The Street View Text Dataset - UCSD Computer Vision”.

>>> from doctr.datasets import SVT >>> train_set = SVT(train=True, download=True) >>> img, target = train_set[0]

- Parameters:

train – whether the subset should be the training one

use_polygons – whether polygons should be considered as rotated bounding box (instead of straight ones)

recognition_task – whether the dataset should be used for recognition task

detection_task – whether the dataset should be used for detection task

**kwargs – keyword arguments from VisionDataset.

- class doctr.datasets.SVHN(train: bool = True, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)

SVHN dataset from “The Street View House Numbers (SVHN) Dataset”.

>>> from doctr.datasets import SVHN
>>> train_set = SVHN(train=True, download=True)
>>> img, target = train_set[0]

- Parameters:

train – whether the subset should be the training one

use_polygons – whether polygons should be treated as rotated bounding boxes (instead of straight ones)

recognition_task – whether the dataset should be used for the recognition task

detection_task – whether the dataset should be used for the detection task

**kwargs – keyword arguments from VisionDataset.

- class doctr.datasets.SynthText(train: bool = True, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)

SynthText dataset from “Synthetic Data for Text Localisation in Natural Images” | “repository” | “website”.

>>> from doctr.datasets import SynthText
>>> train_set = SynthText(train=True, download=True)
>>> img, target = train_set[0]

- Parameters:

train – whether the subset should be the training one

use_polygons – whether polygons should be treated as rotated bounding boxes (instead of straight ones)

recognition_task – whether the dataset should be used for the recognition task

detection_task – whether the dataset should be used for the detection task

**kwargs – keyword arguments from VisionDataset.

- class doctr.datasets.IC03(train: bool = True, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)

IC03 dataset from “ICDAR 2003 Robust Reading Competitions: Entries, Results and Future Directions”.

>>> from doctr.datasets import IC03
>>> train_set = IC03(train=True, download=True)
>>> img, target = train_set[0]

- Parameters:

train – whether the subset should be the training one

use_polygons – whether polygons should be treated as rotated bounding boxes (instead of straight ones)

recognition_task – whether the dataset should be used for the recognition task

detection_task – whether the dataset should be used for the detection task

**kwargs – keyword arguments from VisionDataset.

- class doctr.datasets.IC13(img_folder: str, label_folder: str, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)

IC13 dataset from “ICDAR 2013 Robust Reading Competition”.

>>> # NOTE: You need to download both image and label parts from Focused Scene Text challenge Task2.1 2013-2015.
>>> from doctr.datasets import IC13
>>> train_set = IC13(img_folder="/path/to/Challenge2_Training_Task12_Images",
...                  label_folder="/path/to/Challenge2_Training_Task1_GT")
>>> img, target = train_set[0]
>>> test_set = IC13(img_folder="/path/to/Challenge2_Test_Task12_Images",
...                 label_folder="/path/to/Challenge2_Test_Task1_GT")
>>> img, target = test_set[0]

- Parameters:

img_folder – folder with all the images of the dataset

label_folder – folder with all annotation files for the images

use_polygons – whether polygons should be treated as rotated bounding boxes (instead of straight ones)

recognition_task – whether the dataset should be used for the recognition task

detection_task – whether the dataset should be used for the detection task

**kwargs – keyword arguments from AbstractDataset.

- class doctr.datasets.IMGUR5K(img_folder: str, label_path: str, train: bool = True, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)[source]¶

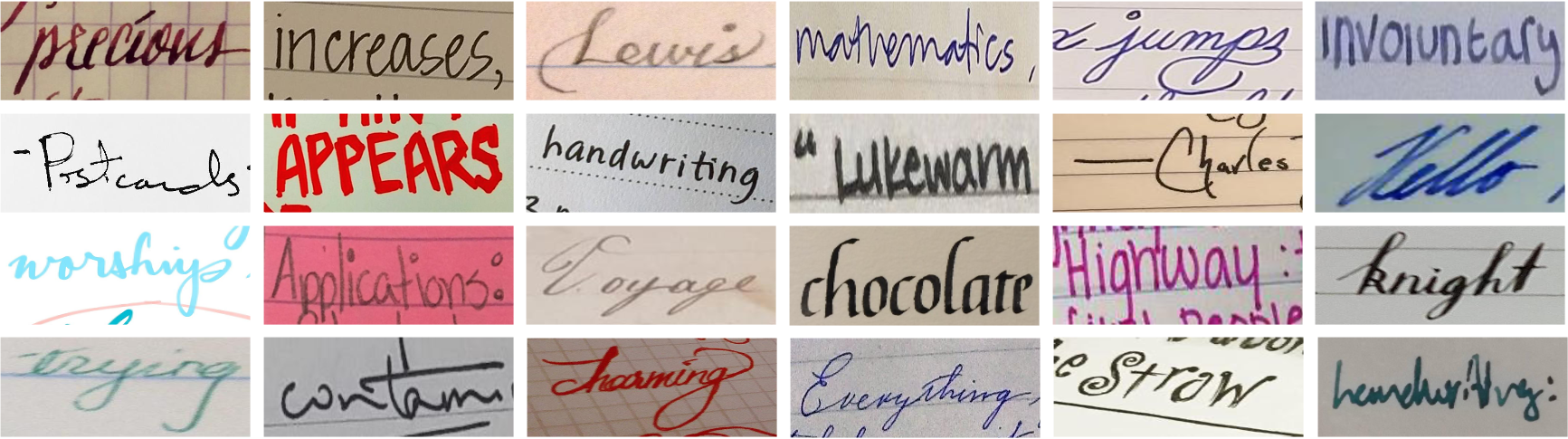

IMGUR5K dataset from “TextStyleBrush: Transfer of Text Aesthetics from a Single Example” | repository.

>>> # NOTE: You need to download/generate the dataset from the repository. >>> from doctr.datasets import IMGUR5K >>> train_set = IMGUR5K(train=True, img_folder="/path/to/IMGUR5K-Handwriting-Dataset/images", >>> label_path="/path/to/IMGUR5K-Handwriting-Dataset/dataset_info/imgur5k_annotations.json") >>> img, target = train_set[0] >>> test_set = IMGUR5K(train=False, img_folder="/path/to/IMGUR5K-Handwriting-Dataset/images", >>> label_path="/path/to/IMGUR5K-Handwriting-Dataset/dataset_info/imgur5k_annotations.json") >>> img, target = test_set[0]

- Parameters:

img_folder – folder with all the images of the dataset

label_path – path to the annotations file of the dataset

train – whether the subset should be the training one

use_polygons – whether polygons should be considered as rotated bounding box (instead of straight ones)

recognition_task – whether the dataset should be used for recognition task

detection_task – whether the dataset should be used for detection task

**kwargs – keyword arguments from AbstractDataset.

- class doctr.datasets.MJSynth(img_folder: str, label_path: str, train: bool = True, **kwargs: Any)

MJSynth dataset from “Synthetic Data and Artificial Neural Networks for Natural Scene Text Recognition”.

>>> # NOTE: This is a pure recognition dataset without bounding box labels.
>>> # NOTE: You need to download the dataset.
>>> from doctr.datasets import MJSynth
>>> train_set = MJSynth(img_folder="/path/to/mjsynth/mnt/ramdisk/max/90kDICT32px",
...                     label_path="/path/to/mjsynth/mnt/ramdisk/max/90kDICT32px/imlist.txt",
...                     train=True)
>>> img, target = train_set[0]
>>> test_set = MJSynth(img_folder="/path/to/mjsynth/mnt/ramdisk/max/90kDICT32px",
...                    label_path="/path/to/mjsynth/mnt/ramdisk/max/90kDICT32px/imlist.txt",
...                    train=False)
>>> img, target = test_set[0]

- Parameters:

img_folder – folder with all the images of the dataset

label_path – path to the file with the labels

train – whether the subset should be the training one

**kwargs – keyword arguments from AbstractDataset.

- class doctr.datasets.IIITHWS(img_folder: str, label_path: str, train: bool = True, **kwargs: Any)

IIITHWS dataset from “Generating Synthetic Data for Text Recognition” | “repository” | “website”.

>>> # NOTE: This is a pure recognition dataset without bounding box labels.
>>> # NOTE: You need to download the dataset.
>>> from doctr.datasets import IIITHWS
>>> train_set = IIITHWS(img_folder="/path/to/iiit-hws/Images_90K_Normalized",
...                     label_path="/path/to/IIIT-HWS-90K.txt",
...                     train=True)
>>> img, target = train_set[0]
>>> test_set = IIITHWS(img_folder="/path/to/iiit-hws/Images_90K_Normalized",
...                    label_path="/path/to/IIIT-HWS-90K.txt",
...                    train=False)
>>> img, target = test_set[0]

- Parameters:

img_folder – folder with all the images of the dataset

label_path – path to the file with the labels

train – whether the subset should be the training one

**kwargs – keyword arguments from AbstractDataset.

- class doctr.datasets.DocArtefacts(train: bool = True, use_polygons: bool = False, **kwargs: Any)

Object detection dataset for non-textual elements in documents. The dataset includes a variety of synthetic document pages with non-textual elements.

>>> from doctr.datasets import DocArtefacts
>>> train_set = DocArtefacts(train=True, download=True)
>>> img, target = train_set[0]

- Parameters:

train – whether the subset should be the training one

use_polygons – whether polygons should be treated as rotated bounding boxes (instead of straight ones)

**kwargs – keyword arguments from VisionDataset.

- class doctr.datasets.WILDRECEIPT(img_folder: str, label_path: str, train: bool = True, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)

WildReceipt dataset from “Spatial Dual-Modality Graph Reasoning for Key Information Extraction” | “repository”.

>>> # NOTE: You need to download the dataset first.
>>> from doctr.datasets import WILDRECEIPT
>>> train_set = WILDRECEIPT(train=True, img_folder="/path/to/wildreceipt/",
...                         label_path="/path/to/wildreceipt/train.txt")
>>> img, target = train_set[0]
>>> test_set = WILDRECEIPT(train=False, img_folder="/path/to/wildreceipt/",
...                        label_path="/path/to/wildreceipt/test.txt")
>>> img, target = test_set[0]

- Parameters:

img_folder – folder with all the images of the dataset

label_path – path to the annotations file of the dataset

train – whether the subset should be the training one

use_polygons – whether polygons should be treated as rotated bounding boxes (instead of straight ones)

recognition_task – whether the dataset should be used for the recognition task

detection_task – whether the dataset should be used for the detection task

**kwargs – keyword arguments from AbstractDataset.

- class doctr.datasets.COCOTEXT(img_folder: str, label_path: str, train: bool = True, use_polygons: bool = False, recognition_task: bool = False, detection_task: bool = False, **kwargs: Any)

COCO-Text dataset from “COCO-Text: Dataset and Benchmark for Text Detection and Recognition in Natural Images” | “homepage”.

>>> # NOTE: You need to download the dataset first.
>>> from doctr.datasets import COCOTEXT
>>> train_set = COCOTEXT(train=True, img_folder="/path/to/coco_text/train2014/",
...                      label_path="/path/to/coco_text/cocotext.v2.json")
>>> img, target = train_set[0]
>>> test_set = COCOTEXT(train=False, img_folder="/path/to/coco_text/train2014/",
...                     label_path="/path/to/coco_text/cocotext.v2.json")
>>> img, target = test_set[0]

- Parameters:

img_folder – folder with all the images of the dataset

label_path – path to the annotations file of the dataset

train – whether the subset should be the training one

use_polygons – whether polygons should be treated as rotated bounding boxes (instead of straight ones)

recognition_task – whether the dataset should be used for the recognition task

detection_task – whether the dataset should be used for the detection task

**kwargs – keyword arguments from AbstractDataset.

Synthetic dataset generator

- class doctr.datasets.CharacterGenerator(*args, **kwargs)

Implements a character image generation dataset

>>> from doctr.datasets import CharacterGenerator
>>> ds = CharacterGenerator(vocab='abdef', num_samples=100)
>>> img, target = ds[0]

- Parameters:

vocab – vocabulary to take the characters from

num_samples – number of samples that will be generated when iterating over the dataset

cache_samples – whether generated images should be cached beforehand

font_family – font to use to generate the text images

img_transforms – composable transformations that will be applied to each image

sample_transforms – composable transformations that will be applied to both the image and the target

- class doctr.datasets.WordGenerator(vocab: str, min_chars: int, max_chars: int, num_samples: int, cache_samples: bool = False, font_family: str | list[str] | None = None, img_transforms: Callable[[Any], Any] | None = None, sample_transforms: Callable[[Any, Any], tuple[Any, Any]] | None = None)

Implements a word image generation dataset

>>> from doctr.datasets import WordGenerator
>>> ds = WordGenerator(vocab='abdef', min_chars=1, max_chars=32, num_samples=100)
>>> img, target = ds[0]

- Parameters:

vocab – vocabulary to take the characters from

min_chars – minimum number of characters in a word

max_chars – maximum number of characters in a word

num_samples – number of samples that will be generated when iterating over the dataset

cache_samples – whether generated images should be cached beforehand

font_family – font to use to generate the text images

img_transforms – composable transformations that will be applied to each image

sample_transforms – composable transformations that will be applied to both the image and the target
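The label-sampling step that such a generator depends on can be sketched in a few lines. This is an illustrative stand-in and not docTR's implementation: `sample_words` is a hypothetical helper, it assumes uniform sampling of lengths and characters, and it only produces the label strings (rendering them to images with a font is left out).

```python
import random


def sample_words(vocab, min_chars, max_chars, num_samples, seed=None):
    """Draw `num_samples` random words whose characters come from `vocab`.

    Hypothetical helper: each word length is drawn uniformly from
    [min_chars, max_chars], and each character uniformly from `vocab`.
    """
    rng = random.Random(seed)
    words = []
    for _ in range(num_samples):
        length = rng.randint(min_chars, max_chars)  # inclusive on both ends
        words.append("".join(rng.choice(vocab) for _ in range(length)))
    return words


words = sample_words(vocab="abdef", min_chars=1, max_chars=32, num_samples=100, seed=0)
print(len(words), words[:3])
```

Passing a fixed `seed` makes the sampled labels reproducible, which is convenient when comparing training runs.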

Custom dataset loader

- class doctr.datasets.DetectionDataset(img_folder: str, label_path: str, use_polygons: bool = False, **kwargs: Any)

Implements a text detection dataset

>>> from doctr.datasets import DetectionDataset
>>> train_set = DetectionDataset(img_folder="/path/to/images",
...                              label_path="/path/to/labels.json")
>>> img, target = train_set[0]

- Parameters:

img_folder – folder with all the images of the dataset

label_path – path to the annotations of each image

use_polygons – whether polygons should be treated as rotated bounding boxes (instead of straight ones)

**kwargs – keyword arguments from AbstractDataset.

- class doctr.datasets.RecognitionDataset(img_folder: str, labels_path: str, **kwargs: Any)

Dataset implementation for text recognition tasks

>>> from doctr.datasets import RecognitionDataset
>>> train_set = RecognitionDataset(img_folder="/path/to/images",
...                                labels_path="/path/to/labels.json")
>>> img, target = train_set[0]

- Parameters:

img_folder – path to the images folder

labels_path – path to the json file containing all labels (character sequences)

**kwargs – keyword arguments from AbstractDataset.

- class doctr.datasets.OCRDataset(img_folder: str, label_file: str, use_polygons: bool = False, **kwargs: Any)

Implements an OCR dataset

>>> from doctr.datasets import OCRDataset
>>> train_set = OCRDataset(img_folder="/path/to/images",
...                        label_file="/path/to/labels.json")
>>> img, target = train_set[0]

- Parameters:

img_folder – local path to image folder (all jpg at the root)

label_file – local path to the label file

use_polygons – whether polygons should be treated as rotated bounding boxes (instead of straight ones)

**kwargs – keyword arguments from AbstractDataset.

Dataset utils

- doctr.datasets.translate(input_string: str, vocab_name: str, unknown_char: str = '■') -> str

Translate an input string into a given vocabulary

- Parameters:

input_string – input string to translate

vocab_name – vocabulary to use (french, latin, …)

unknown_char – unknown character for non-translatable characters

- Returns:

A string translated in a given vocab
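A simplified sketch of this kind of translation is shown below. It assumes the vocabulary is passed directly as a string rather than looked up by name; `translate_sketch` and its fallback strategy (stripping accents before giving up on a character) are illustrative assumptions, not docTR's implementation.

```python
import unicodedata


def translate_sketch(input_string, vocab, unknown_char="■"):
    """Map every character of `input_string` onto `vocab`.

    Hypothetical helper: a character outside `vocab` is first stripped of
    its accents; if the result is still not in `vocab`, it is replaced by
    `unknown_char`.
    """
    out = []
    for char in input_string:
        if char not in vocab:
            # Decompose the character, then drop every non-ASCII code point
            stripped = unicodedata.normalize("NFD", char).encode("ascii", "ignore").decode("ascii")
            char = stripped if stripped and stripped in vocab else unknown_char
        out.append(char)
    return "".join(out)


vocab = "abcdefghijklmnopqrstuvwxyz"
# "\u00e9" (e with acute accent) falls back to "e"; "#" has no equivalent
print(translate_sketch("caf\u00e9#", vocab))
```

With this vocabulary the call prints `cafe■`: the accented letter is recovered, while the symbol is marked as untranslatable.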

- doctr.datasets.encode_string(input_string: str, vocab: str) -> list[int]

Given a predefined mapping, encode the string to a sequence of numbers

- Parameters:

input_string – string to encode

vocab – vocabulary (string), the encoding is given by the indexing of the character sequence

- Returns:

A list encoding the input_string
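Index-based encoding, as described above, can be sketched as follows. `encode_string_sketch` is a hypothetical stand-in written for illustration, not the library function itself.

```python
def encode_string_sketch(input_string, vocab):
    """Encode each character as its index in `vocab` (hypothetical helper)."""
    try:
        return [vocab.index(char) for char in input_string]
    except ValueError as exc:
        # str.index raises ValueError when a character is missing from vocab
        raise ValueError("some characters are not part of the vocabulary") from exc


vocab = "abdef"
print(encode_string_sketch("bed", vocab))
```

For the vocab `abdef`, the string `bed` becomes `[1, 3, 2]`, because `b`, `e` and `d` sit at positions 1, 3 and 2.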

- doctr.datasets.decode_sequence(input_seq: ndarray | Sequence[int], mapping: str) -> str

Given a predefined mapping, decode the sequence of numbers to a string

- Parameters:

input_seq – array to decode

mapping – vocabulary (string), the encoding is given by the indexing of the character sequence

- Returns:

A string, decoded from input_seq
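Decoding is the inverse lookup: each number selects one character of the mapping. The sketch below uses a hypothetical helper, `decode_sequence_sketch`, and the explicit bounds check is an assumption added for clarity.

```python
def decode_sequence_sketch(input_seq, mapping):
    """Decode a sequence of indices back into a string (hypothetical helper)."""
    if any(not 0 <= idx < len(mapping) for idx in input_seq):
        raise ValueError("every index must point inside the vocabulary")
    return "".join(mapping[idx] for idx in input_seq)


mapping = "abdef"
print(decode_sequence_sketch([1, 3, 2], mapping))
```

With the mapping `abdef`, the sequence `[1, 3, 2]` decodes to `bed`.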

- doctr.datasets.encode_sequences(sequences: list[str], vocab: str, target_size: int | None = None, eos: int = -1, sos: int | None = None, pad: int | None = None, dynamic_seq_length: bool = False) -> ndarray

Encode character sequences using a given vocab as mapping

- Parameters:

sequences – the list of character sequences of size N

vocab – the ordered vocab to use for encoding

target_size – maximum length of the encoded data

eos – encoding of End Of String

sos – optional encoding of Start Of String

pad – optional encoding for padding. In case of padding, all sequences are followed by 1 EOS then PAD

dynamic_seq_length – if target_size is specified, uses it as upper bound and enables dynamic sequence size

- Returns:

the padded encoded data as a tensor
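The padding rule for `eos` and `pad` can be illustrated with a reduced sketch. Several simplifications here are assumptions: `encode_sequences_sketch` is a hypothetical helper, `target_size` is mandatory, there is no SOS symbol or dynamic length, and the result is a list of lists rather than an ndarray.

```python
def encode_sequences_sketch(sequences, vocab, target_size, eos=-1, pad=None):
    """Encode and pad a batch of strings to a fixed length (hypothetical helper).

    Simplifications: `target_size` is mandatory, SOS is not supported, and
    the result is a list of lists instead of an ndarray.
    """
    # Without a dedicated PAD symbol, EOS doubles as the filler value
    filler = eos if pad is None else pad
    batch = []
    for seq in sequences:
        encoded = [vocab.index(char) for char in seq]
        if pad is not None:
            encoded.append(eos)  # exactly one EOS, then PAD up to target_size
        encoded = encoded[:target_size]  # truncate sequences that are too long
        batch.append(encoded + [filler] * (target_size - len(encoded)))
    return batch


vocab = "abdef"
with_pad = encode_sequences_sketch(["bed", "fa"], vocab, target_size=5, eos=5, pad=6)
without_pad = encode_sequences_sketch(["bed", "fa"], vocab, target_size=5, eos=5)
print(with_pad)
print(without_pad)
```

In the first call every row holds the characters, one EOS (5), then PAD (6); in the second call the remaining positions are simply filled with EOS. Both special values lie outside the index range of the 5-character vocab.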

- doctr.datasets.pre_transform_multiclass(img, target: tuple[ndarray, list]) -> tuple[ndarray, dict[str, list]]

Converts multiclass target to relative coordinates.

- Parameters:

img – Image

target – tuple of target polygons and their class names

- Returns:

Image and dictionary of boxes, with class names as keys
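The grouping described above might look like the sketch below. It rests on several assumptions: `group_boxes_by_class` is a hypothetical helper, it takes the image shape `(height, width)` instead of the image itself, and it works on straight `(xmin, ymin, xmax, ymax)` boxes in absolute pixels rather than polygons.

```python
def group_boxes_by_class(img_shape, target):
    """Group boxes by class name and convert them to relative coordinates.

    Hypothetical helper: `img_shape` is (height, width), and `target` is a
    pair (boxes, class_names) with absolute (xmin, ymin, xmax, ymax) boxes.
    """
    height, width = img_shape
    boxes, class_names = target
    grouped = {name: [] for name in sorted(set(class_names))}
    for name, (xmin, ymin, xmax, ymax) in zip(class_names, boxes):
        grouped[name].append((xmin / width, ymin / height, xmax / width, ymax / height))
    return grouped


target = ([(0, 0, 50, 20), (50, 40, 100, 80), (10, 10, 20, 20)], ["words", "qr_code", "words"])
grouped = group_boxes_by_class((100, 200), target)
print(grouped)
```

Each class name maps to the list of its boxes, so downstream code can handle every class separately.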

- doctr.datasets.crop_bboxes_from_image(img_path: str | Path, geoms: ndarray) -> list[ndarray]

Crop a set of bounding boxes from an image

- Parameters:

img_path – path to the image

geoms – an array of polygons of shape (N, 4, 2) or of straight boxes of shape (N, 4)

- Returns:

a list of cropped images
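For straight boxes, cropping amounts to slicing rows and columns. The sketch below works on an in-memory image stored as a list of pixel rows instead of reading a file; `crop_straight_boxes`, the `(xmin, ymin, xmax, ymax)` layout and the exclusive upper bounds are assumptions made for illustration.

```python
def crop_straight_boxes(image, boxes):
    """Crop straight boxes from an image stored as a list of pixel rows.

    Hypothetical helper: boxes are absolute integer (xmin, ymin, xmax, ymax)
    coordinates, with xmax and ymax exclusive.
    """
    return [[row[xmin:xmax] for row in image[ymin:ymax]] for xmin, ymin, xmax, ymax in boxes]


# 4x6 toy "image" whose pixel value encodes its position (10 * row + column)
image = [[10 * r + c for c in range(6)] for r in range(4)]
crops = crop_straight_boxes(image, [(1, 0, 3, 2), (4, 2, 6, 4)])
print(crops[0])
```

Rotated polygons need an extra rectification step (for example a perspective warp), which this sketch does not cover.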

- doctr.datasets.convert_target_to_relative(img: ImageTensor, target: ndarray | dict[str, Any]) -> tuple[ImageTensor, dict[str, Any] | ndarray]

Converts target to relative coordinates

- Parameters:

img – tf.Tensor or torch.Tensor representing the image

target – target to convert to relative coordinates (boxes (N, 4) or polygons (N, 4, 2))

- Returns:

The image and the target in relative coordinates
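Converting to relative coordinates means dividing x values by the image width and y values by the image height. The sketch below covers both target layouts; `to_relative` is a hypothetical helper that takes the image shape `(height, width)` directly, which is an assumption rather than the library's signature.

```python
def to_relative(geoms, img_shape):
    """Scale absolute coordinates into the [0, 1] range (hypothetical helper).

    `img_shape` is (height, width). Each geometry is either a straight box
    (xmin, ymin, xmax, ymax) or a polygon given as four (x, y) points.
    """
    height, width = img_shape
    relative = []
    for geom in geoms:
        if isinstance(geom[0], (int, float)):  # straight box: four scalars
            xmin, ymin, xmax, ymax = geom
            relative.append((xmin / width, ymin / height, xmax / width, ymax / height))
        else:  # polygon: four (x, y) points
            relative.append([(x / width, y / height) for x, y in geom])
    return relative


boxes = [(64, 32, 128, 96)]
polygons = [[(64, 32), (128, 32), (128, 96), (64, 96)]]
rel_boxes = to_relative(boxes, img_shape=(128, 256))
rel_polygons = to_relative(polygons, img_shape=(128, 256))
print(rel_boxes)
print(rel_polygons)
```

Relative targets stay valid when the image is resized, which is why they are convenient for training pipelines.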

Supported Vocabs

Because textual content must be encoded properly for models to interpret it efficiently, docTR supports multiple vocabs (ordered character sets).

Name |

size |

characters |

|---|---|---|

latin |

94 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ |

english |

100 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ |

albanian |

104 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿çëÇË |

afrikaans |

114 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿èëïîôûêÈËÏÎÔÛÊ |

azerbaijani |

111 |

0123456789abcdefghijklmnopqrstuvxyzABCDEFGHIJKLMNOPQRSTUVXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿çəğöşüÇƏĞÖŞÜ₼ |

basque |

104 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ñçÑÇ |

bosnian |

102 |

0123456789abcdefghijklmnoprstuvzABCDEFGHIJKLMNOPRSTUVZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿čćđšžČĆĐŠŽ |

catalan |

120 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿àèéíïòóúüçÀÈÉÍÏÒÓÚÜÇ |

croatian |

110 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ČčĆćĐ𩹮ž |

czech |

130 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿áčďéěíňóřšťúůýžÁČĎÉĚÍŇÓŘŠŤÚŮÝŽ |

danish |

106 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿æøåÆØÅ |

dutch |

114 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿áéíóúüñÁÉÍÓÚÜÑ |

estonian |

112 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿šžõäöüŠŽÕÄÖÜ |

esperanto |

105 |

0123456789abcdefghijklmnoprstuvzABCDEFGHIJKLMNOPRSTUVZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ĉĝĥĵŝŭĈĜĤĴŜŬ₷ |

french |

126 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿àâéèêëîïôùûüçÀÂÉÈÊËÎÏÔÙÛÜÇ |

finnish |

104 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿äöÄÖ |

frisian |

108 |

0123456789abcdefghijklmnoprstuvwyzABCDEFGHIJKLMNOPRSTUVWYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿âêôûúÂÊÔÛÚƒƑ |

galician |

98 |

0123456789abcdefghilmnopqrstuvxyzABCDEFGHILMNOPQRSTUVXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ñÑçÇ |

german |

108 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿äöüßÄÖÜẞ |

hausa |

101 |

0123456789abcdefghijklmnorstuwyzABCDEFGHIJKLMNORSTUWYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ɓɗƙƴƁƊƘƳ₦ |

hungarian |

114 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿áéíóöúüÁÉÍÓÖÚÜ |

icelandic |

114 |

0123456789abdefghijklmnoprstuvxyzABDEFGHIJKLMNOPRSTUVXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ðáéíóúýþæöÐÁÉÍÓÚÝÞÆÖ |

indonesian |

100 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ |

irish |

110 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿áéíóúÁÉÍÓÚ |

italian |

120 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿àèéìíîòóùúÀÈÉÌÍÎÒÓÙÚ |

latvian |

116 |

0123456789abcdefghijklmnoprstuvyzABCDEFGHIJKLMNOPRSTUVYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿āčēģīķļņšūžĀČĒĢĪĶĻŅŠŪŽ |

lithuanian |

112 |

0123456789abcdefghijklmnoprstuvyzABCDEFGHIJKLMNOPRSTUVYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ąčęėįšųūžĄČĘĖĮŠŲŪŽ |

luxembourgish |

110 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿äöüéëÄÖÜÉË |

malagasy |

94 |

0123456789abdefghijklmnoprstvyzABDEFGHIJKLMNOPRSTVYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ôñÔÑ |

malay |

100 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ |

maltese |

104 |

0123456789abdefghijklmnopqrstuvwxzABDEFGHIJKLMNOPQRSTUVWXZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ċġħżĊĠĦŻ |

maori |

84 |

0123456789aeghikmnprtuwAEGHIKMNPRTUW!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿āēīōūĀĒĪŌŪ |

montenegrin |

103 |

0123456789abcdefghijklmnoprstuvzABCDEFGHIJKLMNOPRSTUVZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿čćšžźČĆŠŚŽŹ |

norwegian |

106 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿æøåÆØÅ |

polish |

118 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ąćęłńóśźżĄĆĘŁŃÓŚŹŻ |

portuguese |

128 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿áàâãéêíïóôõúüçÁÀÂÃÉÊÍÏÓÔÕÚÜÇ |

quechua |

90 |

0123456789acehiklmnopqrstuwyACEHIKLMNOPQRSTUWY!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ñÑĉĈçÇ |

romanian |

110 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ăâîșțĂÂÎȘȚ |

scottish_gaelic |

94 |

0123456789abcdefghilmnoprstuABCDEFGHILMNOPRSTU!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿àèìòùÀÈÌÒÙ |

serbian_latin |

110 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿čćđžšČĆĐŽŠ |

slovak |

134 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ôäčďľňšťžáéíĺóŕúýÔÄČĎĽŇŠŤŽÁÉÍĹÓŔÚÝ |

slovene |

102 |

0123456789abcdefghijklmnoprstuvzABCDEFGHIJKLMNOPRSTUVZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿čćđšžČĆĐŠŽ |

somali |

94 |

0123456789abcdefghijklmnoqrstuwxyABCDEFGHIJKLMNOQRSTUWXY!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ |

spanish |

116 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿áéíóúüñÁÉÍÓÚÜÑ¡¿ |

swahili |

96 |

0123456789abcdefghijklmnoprstuvwyzABCDEFGHIJKLMNOPRSTUVWYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ |

swedish |

106 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿åäöÅÄÖ |

tagalog |

95 |

0123456789abdefghijklmnoprstuvyzABDEFGHIJKLMNOPRSTUVYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ñÑ₱ |

turkish |

113 |

0123456789abcdefghijklmnoprstuvyzABCDEFGHIJKLMNOPRSTUVYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿çğıöşüâîûÇĞİÖŞÜÂÎÛ₺ |

uzbek_latin |

110 |

0123456789abcdefghijklmnopqrstuvxyzABCDEFGHIJKLMNOPQRSTUVXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿çğɉñöşÇĞɈÑÖŞ |

vietnamese |

235 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿áàảạãăắằẳẵặâấầẩẫậđéèẻẽẹêếềểễệóòỏõọôốồổộỗơớờởợỡúùủũụưứừửữựíìỉĩịýỳỷỹỵÁÀẢẠÃĂẮẰẲẴẶÂẤẦẨẪẬĐÉÈẺẼẸÊẾỀỂỄỆÓÒỎÕỌÔỐỒỔỘỖƠỚỜỞỢỠÚÙỦŨỤƯỨỪỬỮỰÍÌỈĨỊÝỲỶỸỴ₫ |

welsh |

102 |

0123456789abcdefghijlmnoprstuwyABCDEFGHIJLMNOPRSTUWY!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿âêîôŵŷÂÊÎÔŴŶ |

yoruba |

97 |

0123456789abdefghijklmnoprstuwyABDEFGHIJKLMNOPRSTUWY!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ẹọṣẸỌṢ₦ |

zulu |

100 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿ |

russian |

114 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯёыэЁЫЭъЪ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿₽ |

belarusian |

116 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯёыэЁЫЭ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿ўiЎI₽ |

ukrainian |

114 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿ґіїєҐІЇЄ₴ |

tatar |

124 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯёыэЁЫЭъЪ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿₽ӘәҖҗҢңӨөҮү |

tajik |

125 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯёыэЁЫЭъЪ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿ҒғҚқҲҳҶҷӢӣӮӯ |

kazakh |

132 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯёыэЁЫЭъЪ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿ӘәҒғҚқҢңӨөҰұҮүҺһІі₸ |

kyrgyz |

119 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯёыэЁЫЭъЪ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿ҢңӨөҮү |

bulgarian |

107 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯъЪ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿ |

macedonian |

119 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿ЃѓЅѕЈјЉљЊњЌќЏџ |

mongolian |

128 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯёыэЁЫЭъЪ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿ӨөҮү᠐᠑᠒᠓᠔᠕᠖᠗᠘᠙₮ |

yakut |

124 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯёыэЁЫЭъЪ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿ҔҕҤҥӨөҺһҮү₽ |

serbian_cyrillic |

107 |

абвгдежзиклмнопрстуфхцчшАБВГДЕЖЗИКЛМНОПРСТУФХЦЧШJjЂђЉљЊњЋћЏџ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿ |

uzbek_cyrillic |

121 |

абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯёыэЁЫЭъЪ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~£€¥¢฿ЎўҚқҒғҲҳ |

greek |

106 |

!”#$%&’()*+,-./:;<=>?@[]^_`{|}~αβγδεζηθικλμνξοπρστςυφχψωΑΒΓΔΕΖΗΘΙΚΛΜΝΞΟΠΡΣΤΥΦΧΨΩ£€¥¢฿άέήίϊΐόύϋΰώΆΈΉΊΪΌΎΫΏ |

greek_extended |

301 |

!”#$%&’()*+,-./:;<=>?@[]^_`{|}~αβγδεζηθικλμνξοπρστςυφχψωΑΒΓΔΕΖΗΘΙΚΛΜΝΞΟΠΡΣΤΥΦΧΨΩ£€¥¢฿άέήίϊΐόύϋΰώΆΈΉΊΪΌΎΫΏͶͷϜϝἀἁἂἃἄἅἆἇἈἉἊἋἌἍἎἏἐἑἒἓἔἕἘἙἚἛἜἝἠἡἢἣἤἥἦἧἨἩἪἫἬἭἮἯἰἱἲἳἴἵἶἷἸἹἺἻἼἽἾἿὀὁὂὃὄὅὈὉὊὋὌὍὐὑὒὓὔὕὖὗὙὛὝὟὠὡὢὣὤὥὦὧὨὩὪὫὬὭὮὯὰὲὴὶὸὺὼᾀᾁᾂᾃᾄᾅᾆᾇᾈᾉᾊᾋᾌᾍᾎᾏᾐᾑᾒᾓᾔᾕᾖᾗᾘᾙᾚᾛᾜᾝᾞᾟᾠᾡᾢᾣᾤᾥᾦᾧᾨᾩᾪᾫᾬᾭᾮᾯᾲᾳᾴᾶᾷᾺᾼῂῃῄῆῇῈῊῌῒΐῖῗῚῢΰῤῥῦῧῪῬῲῳῴῶῷῸῺῼ |

hebrew |

176 |

0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~אבגדהוזחטיךכלםמןנסעףפץצקרשתְֱֲֳִֵֶַָׇֹֺֻֽ־ֿ׀ׁׂ׃ׅׄ׆׳״֑֖֛֢֣֤֥֦֧֪֚֭֮֒֓֔֕֗֘֙֜֝֞֟֠֡֨֩֫֬֯ׯװױײיִﬞײַﬠﬡﬢﬣﬤﬥﬦﬧﬨ﬩שׁשׂשּׁשּׂאַאָאּבּגּדּהּוּזּטּיּךּכּלּמּנּסּףּפּצּקּרּשּתּוֹבֿכֿפֿﭏ₪ |

arabic |

116 |

0123456789٠١٢٣٤٥٦٧٨٩ءآأؤإئابةتثجحخدذرزسشصضطظعغـفقكلمنهوىيٱپچژڢڤگکیًٌٍَُِّْٕٓٔٚ؟؛«»—،!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ |

persian |

116 |

0123456789٠١٢٣٤٥٦٧٨٩ءآأؤإئابةتثجحخدذرزسشصضطظعغـفقكلمنهوىيٱپچژڢڤگکیًٌٍَُِّْٕٓٔٚ؟؛«»—،!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ |

urdu |

124 |

0123456789٠١٢٣٤٥٦٧٨٩ءآأؤإئابةتثجحخدذرزسشصضطظعغـفقكلمنهوىيٱپچژڢڤگکیًٌٍَُِّْٕٓٔٚ؟؛«»—،!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ٹڈڑںھےہۃ |

pashto |

126 |

0123456789٠١٢٣٤٥٦٧٨٩ءآأؤإئابةتثجحخدذرزسشصضطظعغـفقكلمنهوىيٱپچژڢڤگکیًٌٍَُِّْٕٓٔٚ؟؛«»—،!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ټډړږښځڅڼېۍ |

kurdish |

121 |

0123456789٠١٢٣٤٥٦٧٨٩ءآأؤإئابةتثجحخدذرزسشصضطظعغـفقكلمنهوىيٱپچژڢڤگکیًٌٍَُِّْٕٓٔٚ؟؛«»—،!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ڵڕۆێە |

uyghur |

123 |

0123456789٠١٢٣٤٥٦٧٨٩ءآأؤإئابةتثجحخدذرزسشصضطظعغـفقكلمنهوىيٱپچژڢڤگکیًٌٍَُِّْٕٓٔٚ؟؛«»—،!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ەېۆۇۈڭھ |

sindhi |

133 |

0123456789٠١٢٣٤٥٦٧٨٩ءآأؤإئابةتثجحخدذرزسشصضطظعغـفقكلمنهوىيٱپچژڢڤگکیًٌٍَُِّْٕٓٔٚ؟؛«»—،!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ڀٿٺٽڦڄڃڇڏڌڊڍڙڳڱڻھ |

devanagari |

151 |

कखगघङचछजझञटठडढणतथदधनपफबभमयरलवशषसहऴऩळक़ख़ग़ज़ड़ढ़फ़य़ऱॺॻॼॽॾअआइईउऊऋऌऍऎएऐऑऒओऔॠॡॲऄॵॶॳॴॷॸॹ०१२३४५६७८९़ंँः॒॑ािीुूृॄॅॆेैॉॊोौॢॣॏॎ्।॥॰ऽꣲ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~₹ |

hindi |

151 |

कखगघङचछजझञटठडढणतथदधनपफबभमयरलवशषसहऴऩळक़ख़ग़ज़ड़ढ़फ़य़ऱॺॻॼॽॾअआइईउऊऋऌऍऎएऐऑऒओऔॠॡॲऄॵॶॳॴॷॸॹ०१२३४५६७८९़ंँः॒॑ािीुूृॄॅॆेैॉॊोौॢॣॏॎ्।॥॰ऽꣲ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~₹ |

sanskrit |

151 |

कखगघङचछजझञटठडढणतथदधनपफबभमयरलवशषसहऴऩळक़ख़ग़ज़ड़ढ़फ़य़ऱॺॻॼॽॾअआइईउऊऋऌऍऎएऐऑऒओऔॠॡॲऄॵॶॳॴॷॸॹ०१२३४५६७८९़ंँः॒॑ािीुूृॄॅॆेैॉॊोौॢॣॏॎ्।॥॰ऽꣲ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~₹ |

marathi |

151 |

कखगघङचछजझञटठडढणतथदधनपफबभमयरलवशषसहऴऩळक़ख़ग़ज़ड़ढ़फ़य़ऱॺॻॼॽॾअआइईउऊऋऌऍऎएऐऑऒओऔॠॡॲऄॵॶॳॴॷॸॹ०१२३४५६७८९़ंँः॒॑ािीुूृॄॅॆेैॉॊोौॢॣॏॎ्।॥॰ऽꣲ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~₹ |

nepali |

151 |

कखगघङचछजझञटठडढणतथदधनपफबभमयरलवशषसहऴऩळक़ख़ग़ज़ड़ढ़फ़य़ऱॺॻॼॽॾअआइईउऊऋऌऍऎएऐऑऒओऔॠॡॲऄॵॶॳॴॷॸॹ०१२३४५६७८९़ंँः॒॑ािीुूृॄॅॆेैॉॊोौॢॣॏॎ्।॥॰ऽꣲ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~₹ |

gujarati |

121 |

કખગઘઙચછજઝઞટઠડઢણતથદધનપફબભમયરલળવશષસહઅઆઇઈઉઊઋઌઍએઐઑઓઔ૦૧૨૩૪૫૬૭૮૯ઁંઃ઼ાિીુૂૃૄૅેૈૉોૌૢૣૺૻૼ૽૾૿્ઽ॥!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ૐ૰૱ |

bengali |

116 |

কখগঘঙচছজঝঞটঠডঢণতথদধনপফবভমযরলশষসহড়ঢ়য়ৰৱৼঅআইঈউঊঋঌএঐওঔৠৡ০১২৩৪৫৬৭৮৯ািীুূৃেৈোৌৗ্ঽৎ৽৺৻!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ঁংঃ়৳ |

tamil |

98 |

கஙசஞடணதநபமயரலவழளறனஅஆஇஈஉஊஎஏஐஒஓஔ௦௧௨௩௪௫௬௭௮௯ாிீுூெேைொோௌ்௰௱௲!”#$%&’()*+,-./:;<=>?@[]^_`{|}~௳௴௵௶௷௸௹௺ஃௐ₹ |

telugu |

119 |

కఖగఘఙచఛజఝఞటఠడఢణతథదధనపఫబభమయరఱలళవశషసహఴఅఆఇఈఉఊఋఌఎఏఐఒఓఔౠౡ౦౧౨౩౪౫౬౭౮౯౸౹౺౻ాిీుూృౄెేైొోౌౢౣ్ఽ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ఁంః₹ |

kannada |

114 |

ಕಖಗಘಙಚಛಜಝಞಟಠಡಢಣತಥದಧನಪಫಬಭಮಯರಲವಶಷಸಹಳಅಆಇಈಉಊಋॠಌೡಎಏಐಒಓಔ೦೧೨೩೪೫೬೭೮೯ಾಿೀುೂೃೄೆೇೈೊೋೌ್।॥ೱೲ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ಂಃಁ₹ |

sinhala |

113 |

කඛගඝඞචඡජඣඤටඨඩඪණතථදධනපඵබභමයරලවශෂසහළෆඅආඇඈඉඊඋඌඍඎඏඐඑඒඓඔඕඖ෦෧෨෩෪෫෬෭෮෯ාැෑිීුූෙේෛොෝෞ්෴!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ංඃ₹ |

malayalam |

116 |

കഖഗഘങചഛജഝഞടഠഡഢണതഥദധനപഫബഭമയരറലളഴവശഷസഹഅആഇഈഉഊഋൠഌൡഎഏഐഒഓഔ൦൧൨൩൪൫൬൭൮൯ാിീുൂൃൄൢൣെേൈൊോൌ്!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ഃ൹ഽ൏ം₹ |

punjabi |

112 |

ਕਖਗਘਙਚਛਜਝਞਟਠਡਢਣਤਥਦਧਨਪਫਬਭਮਯਰਲਵਸ਼ਸਹਖ਼ਗ਼ਜ਼ਫ਼ੜਲ਼ਅਆਇਈਉਊਏਐਓਔੲੳ੦੧੨੩੪੫੬੭੮੯ਂ਼ਾਿੀੁੂੇੈੋੌੑੰੱੵ੍।॥!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ੴ₹ |

odia |

121 |

କଖଗଘଙଚଛଜଝଞଟଠଡଢଣତଥଦଧନପଫବଭମଯରଲଳଵଶଷସହୟୱଡ଼ଢ଼ଅଆଇଈଉଊଋଌଏଐଓଔୡୠ୦୧୨୩୪୫୬୭୮୯୲୳୴୵୶୷ାିୀୁୂୃୄେୈୋୌୢୣ୍ଽ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ଂଃଁ଼୰₹ |

khmer |

134 |

កខគឃងចឆជឈញដឋឌឍណតថទធនបផពភមយរលវឝឞសហឡអឣឤឥឦឧឨឩឪឫឬឭឮឯឰឱឲឳ០១២៣៤៥៦៧៨៩ាិីឹឺុូួើឿៀេែៃោៅ្ំះៈ៉៊់៌៍៎៏័៑៓៝។៕៖៘៙៚ៗៜ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~៛ |

armenian |

131 |

ԱԲԳԴԵԶԷԸԹԺԻԼԽԾԿՀՁՂՃՄՅՆՇՈՉՊՋՌՍՎՏՐՑՒՓՔՕՖՙՠաբգդեզէըթժիլխծկհձղճմյնշոչպջռսվտրցւփքօֆևֈ0123456789!”#$%&’()*+,-./:;<=>?@[]^_`{|}~՚՛՜՝՞՟։֊֏ |

sudanese |

106 |

0123456789᮰᮱᮲᮳᮴᮵᮶᮷᮸᮹ᮊᮋᮌᮍᮎᮏᮐᮑᮒᮓᮔᮕᮖᮗᮘᮙᮚᮛᮜᮝᮞᮟᮠᮮᮯᮺᮻᮼᮽᮾᮿᮃᮄᮅᮆᮇᮈᮉᮀᮁᮂᮡᮢᮣᮤᮥᮦᮧᮨᮩ᮪᮫ᮬᮭ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ |

thai |

129 |

0123456789๐๑๒๓๔๕๖๗๘๙!”#$%&’()*+,-./:;<=>?@[]^_`{|}~๏๚๛ๆฯกขฃคฅฆงจฉชซฌญฎฏฐฑฒณดตถทธนบปผฝพฟภมยรฤลฦวศษสหฬอฮะาำเแโใไๅัิีึืฺุู็่้๊๋์ํ๎฿ |

lao |

124 |

0123456789໐໑໒໓໔໕໖໗໘໙!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ໆໞໟຯກຂຄຆງຈຉຊຌຍຎຏຐຑຒຓດຕຖທຘນບປຜຝພຟຠມຢຣລວຨຩສຫຬອຮະາຳຽເແໂໃໄໜໝັິີຶື຺ຸູົຼ່້໊໋໌ໍ |

burmese |

130 |

0123456789၀၁၂၃၄၅၆၇၈၉႐႑႒႓႔႕႖႗႘႙ကခဂဃငစဆဇဈဉညဋဌဍဎဏတထဒဓနပဖဗဘမယရလဝသဟဠအၐၑၒၓၔၕၚၛၜၝၡၥၦၮၯၰၵၶၷၸၹၺၻၼၽၾၿႀႁႎဣဤဥဦဧဩဪဿ့းံါာိီုူေဲဳဴဵျြွှ္်၊။၌၍၎၏ၤၗ |

javanese |

124 |

0123456789꧐꧑꧒꧓꧔꧕꧖꧗꧘꧙ꦏꦐꦑꦒꦓꦔꦕꦖꦗꦘꦙꦚꦛꦜꦝꦞꦟꦠꦡꦢꦣꦤꦥꦦꦧꦨꦩꦪꦫꦬꦭꦮꦯꦰꦱꦲꦄꦅꦆꦇꦈꦉꦊꦋꦌꦍꦎꦴꦵꦶꦷꦸꦹꦺꦻꦼꦀꦁꦂꦃ꦳ꦽꦾꦿ꧀꧈꧉꧊꧋꧌꧍ꧏ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~ |

georgian |

131 |

0123456789ႠႡႢႣႤႥႦႧႨႩႪႫႬႭႮႯႰႱႲႳႴႵႶႷႸႹႺႻႼႽႾႿჀჁჂჃჄჅჇჍაბგდევზთიკლმნოპჟრსტუფქღყშჩცძწჭხჯჰჱჲჳჴჵჶჷჸჹჺჼჽჾჿ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~჻₾ |

ethiopic |

362 |

ሀሁሂሃሄህሆሇለሉሊላሌልሎሏሐሑሒሓሔሕሖሗመሙሚማሜምሞሟሠሡሢሣሤሥሦሧረሩሪራሬርሮሯሰሱሲሳሴስሶሷሸሹሺሻሼሽሾሿቀቁቂቃቄቅቆቇቈቊቋቌቍቐቑቒቓቔቕቖቘቚቛቜቝበቡቢባቤብቦቧቨቩቪቫቬቭቮቯተቱቲታቴትቶቷቸቹቺቻቼችቾቿኀኁኂኃኄኅኆኇኈኊኋኌኍነኑኒናኔንኖኗኘኙኚኛኜኝኞኟአኡኢኣኤእኦኧከኩኪካኬክኮኯኰኲኳኴኵኸኹኺኻኼኽኾዀዂዃዄዅወዉዊዋዌውዎዏዐዑዒዓዔዕዖዘዙዚዛዜዝዞዟዠዡዢዣዤዥዦዧየዩዪያዬይዮዯደዱዲዳዴድዶዷዸዹዺዻዼዽዾዿጀጁጂጃጄጅጆጇገጉጊጋጌግጎጏጐጒጓጔጕጘጙጚጛጜጝጞጟጠጡጢጣጤጥጦጧጨጩጪጫጬጭጮጯጰጱጲጳጴጵጶጷጸጹጺጻጼጽጾጿፀፁፂፃፄፅፆፇፈፉፊፋፌፍፎፏፐፑፒፓፔፕፖፗፘፙፚᎀᎁᎂᎃᎄᎅᎆᎇᎈᎉᎊᎋᎌᎍᎎᎏ፩፪፫፬፭፮፯፰፱፲፳፴፵፶፷፸፹፺፻፼ |

japanese |

2383 |

0123456789ぁあぃいぅうぇえぉおかがきぎく…路露老労弄郎朗浪廊楼漏籠六録麓論和話賄脇惑枠湾腕!”#$%&’()*+,-./:;<=>?@[]^_`{|}~。・〜°—、「」『』【】゛》《〉〈£€¥¢฿ |

korean |

11237 |

0123456789가각갂갃간갅갆갇갈갉갊틹틺틻틼틽틾틿팀폨폩…흿힀힁힂힃힄힅힆힇히힉힊힋힌힍힎힏힐힑힒힓힔힕힖힗힘힙힚힛힜힝힞힟힠힡힢힣!”#$%&’()*+,-./:;<=>?@[]^_`{|}~。・〜°—、「」『』【】゛》《〉〈£€¥¢฿₩ |

simplified_chinese |

6656 |

0123456789㐀㐁㐂㐃㐄㐅㐆㐇㐈㐉㐊㐋㐌㐍㐎㐏㐐㐑㐒㐓㐔㐕㐖㐗㐘㐙㐚…䶮䶯䶰䶱䶲䶳䶴䶵䶶䶷䶸䶹䶺䶻䶼䶽䶾䶿!”#$%&’()*+,-./:;<=>?@[]^_`{|}~。・〜°—、「」『』【】゛》《〉〈£€¥¢฿ |

multilingual |

726 |

0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ!”#$%&’()*+,-./:;<=>?@[]^_`{|}~°£€¥¢฿çëÇËèïîôûêÈÏÎÔÛÊəğöşüƏĞÖŞÜ₼ñÑčćđšžČĆĐŠŽàéíòóúÀÉÍÒÓÚáďěňřťůýÁĎĚŇŘŤŮÝæøåÆØÅõäÕÄĉĝĥĵŝŭĈĜĤĴŜŬ₷âùÂÙƒƑßẞɓɗƙƴƁƊƘƳ₦ðþÐÞìÌāēģīķļņūĀĒĢĪĶĻŅŪąęėįųĄĘĖĮŲōŌċġħżĊĠĦŻźŚŹłńśŁŃãÃășțĂȘȚľĺŕĽĹŔ¡¿₱ıİ₺ɉɈảạắằẳẵặấầẩẫậẻẽẹếềểễệỏọốồổộỗơớờởợỡủũụưứừửữựỉĩịỳỷỹỵẢẠẮẰẲẴẶẤẦẨẪẬẺẼẸẾỀỂỄỆỎỌỐỒỔỘỖƠỚỜỞỢỠỦŨỤƯỨỪỬỮỰỈĨỊỲỶỸỴ₫ŵŷŴŶṣṢ§абвгдежзийклмнопрстуфхцчшщьюяАБВГДЕЖЗИЙКЛМНОПРСТУФХЦЧШЩЬЮЯёыэЁЫЭъЪ₽ўЎґіїєҐІЇЄ₴ӘәҖҗҢңӨөҮүҒғҚқҲҳҶҷӢӣӮӯҰұҺһ₸ЃѓЅѕЈјЉљЊњЌќЏџ᠐᠑᠒᠓᠔᠕᠖᠗᠘᠙₮ҔҕҤҥЂђЋћαβγδεζηθικλμνξοπρστςυφχψωΑΒΓΔΕΖΗΘΙΚΛΜΝΞΟΠΡΣΤΥΦΧΨΩάέήίϊΐόύϋΰώΆΈΉΊΪΌΎΫΏאבגדהוזחטיךכלםמןנסעףפץצקרשתְֱֲֳִֵֶַָׇֹֺֻֽ־ֿ׀ׁׂ׃ׅׄ׆׳״֑֖֛֢֣֤֥֦֧֪֚֭֮֒֓֔֕֗֘֙֜֝֞֟֠֡֨֩֫֬֯ׯװױײיִﬞײַﬠﬡﬢﬣﬤﬥﬦﬧﬨ﬩שׁשׂשּׁשּׂאַאָאּבּגּדּהּוּזּטּיּךּכּלּמּנּסּףּפּצּקּרּשּתּוֹבֿכֿפֿﭏ₪ |
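As a quick sanity check on the table, the 94 characters of the `latin` row can be rebuilt from Python's standard `string` constants (digits, ASCII letters, punctuation). Whether docTR composes its vocabs in exactly this way is an assumption; the snippet only shows that the counts line up.

```python
import string

# Assumed composition of a Latin vocab: digits, then letters, then punctuation
latin = string.digits + string.ascii_letters + string.punctuation

vocab_size = len(latin)  # number of distinct symbols a model has to predict
has_duplicates = len(set(latin)) != vocab_size  # duplicates would make indices ambiguous
print(vocab_size, has_duplicates)
```

Because encoding relies on character positions, the order of a vocab matters as much as its content: two vocabs with the same characters in a different order produce different encodings.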